
Gradient Ascent to Robust Security at EY using Snowflake Snowpark

Leading an ML SIEM project for Big 4 consulting


Last month marked the end of my role at EY, where I led a cybersecurity project applying machine learning with Snowflake Snowpark. This post covers my experience working at EY and some reflections on what I learned about my own capabilities along the way.

legal note: all information in this is either public information (available to anyone online) or my personal opinion. there is nothing confidential or proprietary. I still like to adhere to the NDAs that I sign.


What is EY and SIEM?

The Big 4 and the Big 3

Consulting brings to mind classic management consulting: teams of people working with clients to solve business problems, making a lot of slide decks and traveling to client sites. The Big 3 here are not rappers; they are McKinsey & Company, Boston Consulting Group, and Bain & Company, known for their prestige and high salaries.

The other side of professional services is audit, assurance, tax, and related services: the Big 4. These are Deloitte, PricewaterhouseCoopers (PwC), Ernst & Young (EY), and KPMG. Interestingly, these firms are also known for their consulting services - for over a decade, they have been growing their consulting practices. Some of this is via acquisition; a notable example is PwC's acquisition of Booz & Company (the commercial consulting firm spun off from Booz Allen Hamilton), rebranded into Strategy&. Edwin G. Booz, the founder of Booz Allen Hamilton, is considered the father of management consulting: his theory was that companies would do better if they could get impartial, third-party advice on their operations.

The consulting arms of the Big 4 offer a lot of services; one of those for EY is cybersecurity. This is where the project I worked on comes in: Security Information and Event Management (SIEM).

SIEM

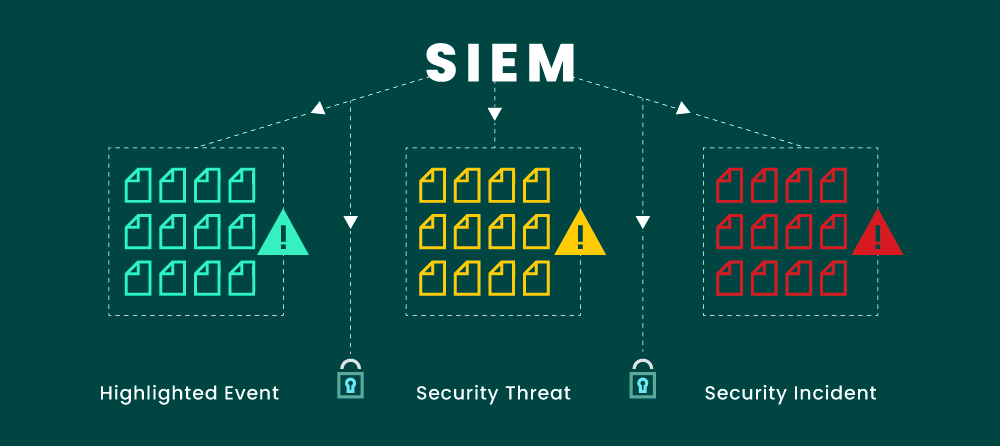

Security Information and Event Management refers to tools that provide real-time analysis of security alerts generated by applications and network hardware.

Machine learning can be applied to SIEMs to improve their detection capabilities across a variety of threats (EY has written more about this, e.g. here and here). Our project was building out some of these capabilities.

The Project

Consulting clubs in college have been growing rapidly. The idea is simple: get a bunch of smart students together and offer services to companies looking to recruit and have projects done. I found an article that seems to discuss some of this with a negative outlook, though it's paywalled so it's just a guess.

I joined a consulting club at Berkeley, UpSync, my freshman year. Over the years, their methods of recruiting and project selection have evolved. My last semester of college, they secured this project with EY.

I had been helping out on the technical side as needed, including with the original project pitches. As time went by, I took the opportunity to lead the project. It was a really formal experience; I had an EY email (aniruth.narayanan@ey.com), access to internal systems and Teams, and a lot of responsibility. In fact, I was even on the payroll (for a donation to the club).

I can't elaborate much on the finer details of the project, but we did use the Snowpark platform. I've included some generic technical details below about Snowpark because I think it's interesting, but feel free to skip to the next section.

Snowflake and Snowpark Machine Learning

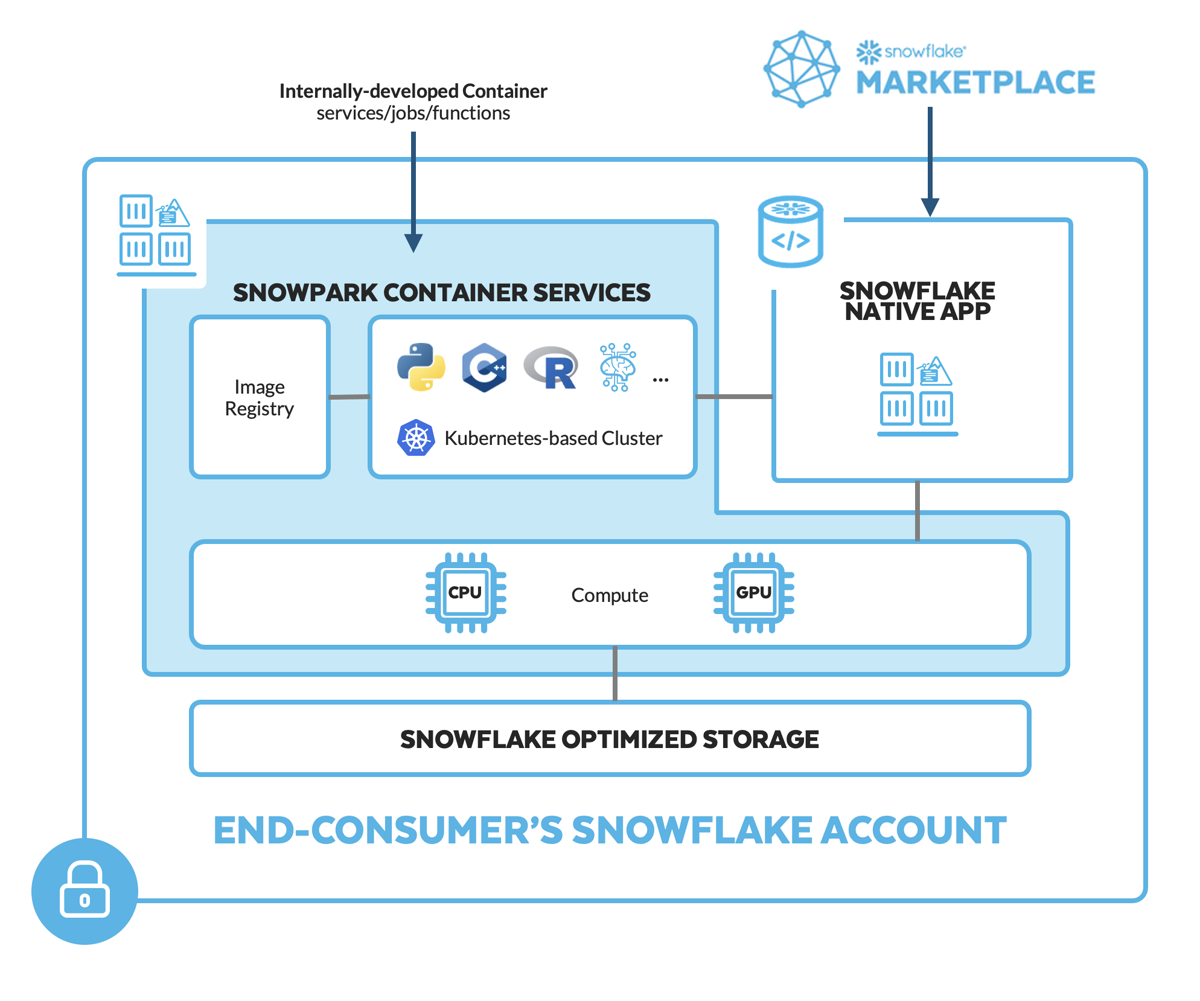

Snowflake is a cloud-based data platform that provides a data warehouse as a service. The compute that processes data for queries runs on a layer separate from storage.

Services, jobs, and functions all can run within Snowflake in connection with data to power applications.

Snowpark is a managed service to run and scale workloads easily, avoiding management of infrastructure. On top of this is Snowpark ML, which allows for building machine learning models in conjunction with data already stored in Snowflake.

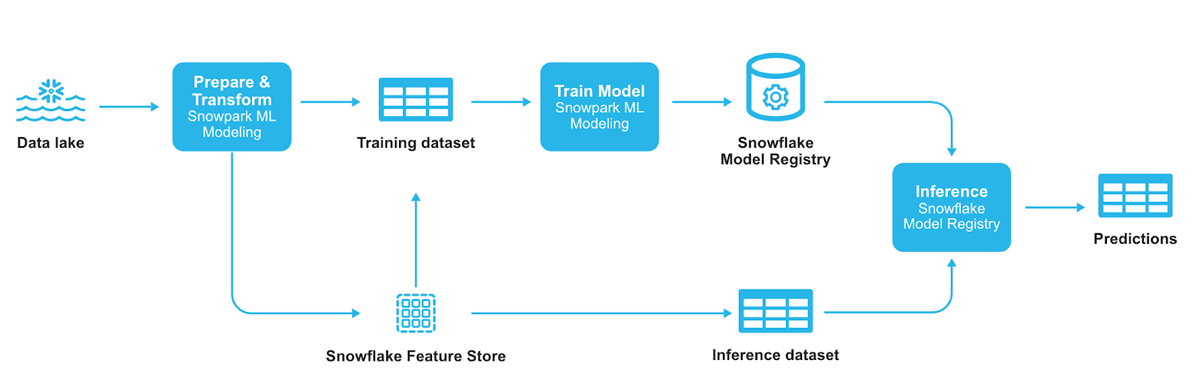

There are two components to production machine learning (in this context):

- Training

- Inference

The first component is the learning component: creating a model based on input data. The second component is the prediction component: using the previously trained model to make predictions on new data.

The diagram shows the flow of tasks in the pipeline. The training component covers everything up to the Model Registry, where the trained model is saved as an object, similar to how the tabular data is stored. The inference component takes the inference dataset and makes predictions by querying the relevant model from the Model Registry.
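To make the split concrete, here is a minimal sketch of the train, register, and predict flow in plain Python. It is not the Snowpark ML API: the `ThresholdModel` class, the `train` function, and the `registry` dictionary are hypothetical stand-ins I made up for illustration, and the data is toy data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ThresholdModel:
    """Toy one-feature classifier: predicts 1 when a value exceeds the threshold."""
    threshold: float

    def predict(self, values):
        return [1 if v > self.threshold else 0 for v in values]


def train(values, labels):
    """Training: place the threshold midway between the two class means."""
    mean_pos = mean(v for v, y in zip(values, labels) if y == 1)
    mean_neg = mean(v for v, y in zip(values, labels) if y == 0)
    return ThresholdModel(threshold=(mean_pos + mean_neg) / 2)


# Hypothetical in-memory stand-in for a model registry (not the Snowflake API).
registry = {}

# Training: fit on labeled data, then save the model under a name and version.
train_values = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
train_labels = [0, 0, 0, 1, 1, 1]
registry[("toy_detector", "v1")] = train(train_values, train_labels)

# Inference: look the model up again and score data it has not seen before.
model = registry[("toy_detector", "v1")]
predictions = model.predict([0.5, 6.0, 7.5, 20.0])
print(predictions)
```

The point of the registry step is that training and inference can run at different times and in different jobs: inference only needs the model's name and version, not the training code.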

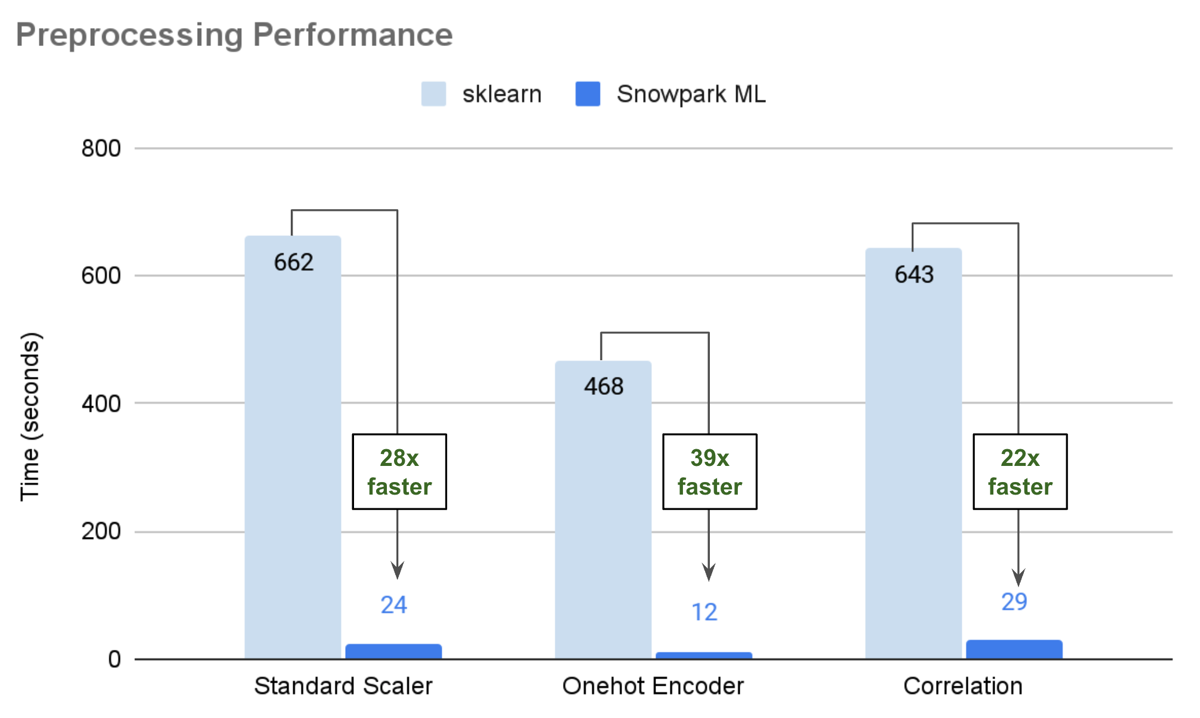

Here's an interesting question: why use Snowpark ML for this? The answer is that it's distributed. This means that the training and inference components can be run across multiple nodes, which can significantly speed up the process.

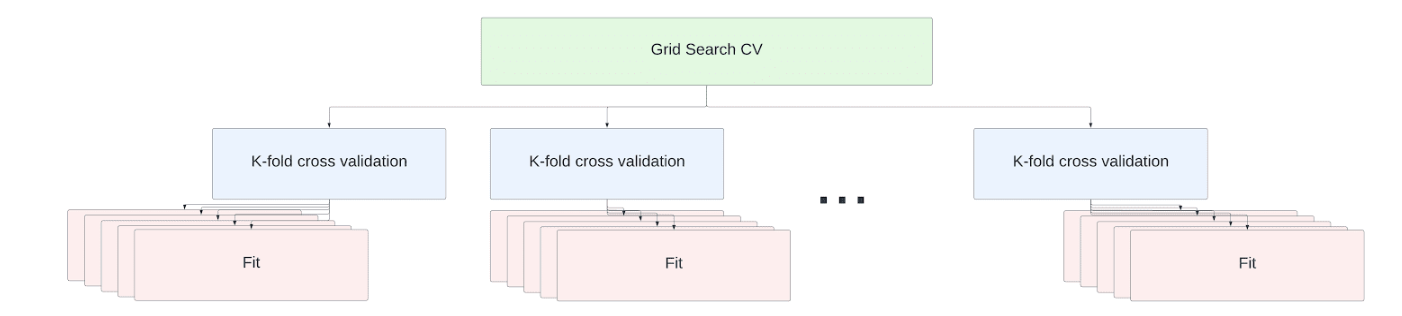

Grid search is a type of hyperparameter tuning: trying every combination of candidate settings to find the right knobs to turn for the best model. Each combination can be evaluated independently, so with distributed computing these operations can be split up and run in parallel for much higher performance.
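As a rough illustration of why grid search parallelizes so well, here is a sketch using only the Python standard library. The `validation_score` function is a hypothetical stand-in for "train a model with these settings and score it", and a thread pool stands in for the separate nodes of a distributed system; none of this is Snowpark code.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def validation_score(params):
    """Hypothetical stand-in for training and scoring a model.

    The score is constructed to peak at learning_rate=0.1 and depth=4,
    so the best combination is known in advance.
    """
    learning_rate, depth = params
    return -((learning_rate - 0.1) ** 2) - 0.01 * (depth - 4) ** 2


# The grid: every combination of the candidate hyperparameter values.
learning_rates = [0.01, 0.1, 1.0]
depths = [2, 4, 8]
grid = list(product(learning_rates, depths))

# Each combination is independent of the others, so they can run concurrently.
# Threads here stand in for the worker nodes of a distributed system.
with ThreadPoolExecutor(max_workers=4) as pool:
    scores = list(pool.map(validation_score, grid))

# Pick the combination with the highest score.
best_score, best_params = max(zip(scores, grid))
print(best_params)
```

With 3 learning rates and 3 depths there are 9 combinations. The grid grows multiplicatively with every knob added, which is why spreading the work across workers pays off.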

Here's a graph published by Snowflake in their docs showing the performance boost over sklearn (scikit-learn) running the same search locally on a single machine. The distributed version is significantly faster.

Final Presentation

Senior EY partners came to San Francisco for RSA, a major security conference. We presented our work to them in person. It was a cool experience, and the questions they asked showed how they thought about bigger picture issues.

Takeaways

Consulting for Consulting

Usually, consulting clubs don't work with consulting firms. Clients are typically "regular" companies, like IKEA or Nvidia. Those projects typically involve market research, strategy decisions, or technical MVPs.

Being inside a consulting firm meant that we saw how things worked from the inside. For one, the slide decks were a lot more detailed, and the level of attention paid to them was higher.

I also got the opportunity to talk to a lot of people at EY. At its core, consulting to me is about problem-solving, and I learned a lot about how to approach problems from different angles.

Leadership

I was the lead as the project executive, which was a new experience for me at this scale. I had to manage a team of 2 project managers and 6 consultants. On previous internships, I was responsible for my own work only. During calls, EY partners would directly ask me questions about intricacies and details. I had to be on top of everything, yet delegate tasks as needed.

As a nice parallel, I had been taking a class on leadership, UGBA 105. It was interesting to see theory in practice - norm-setting, motivation, and conflict resolution strategies.

Systems and Machine Learning

Lastly, this project confirmed my interest in systems and machine learning. I've previously interned at a number of companies in these areas, and I've enjoyed the work I've done. I've worked in a lot of areas, and this was yet another one that I found interesting.

The title of this post is a reference to an algorithm called gradient descent, which is used in machine learning to optimize models by stepping closer to a local minimum (reversed, it becomes gradient ascent, stepping closer to a local maximum). The idea is that by taking small steps in the right direction, you can reach a good solution.
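For the curious, here is a minimal sketch of gradient ascent on a one-dimensional toy function. The objective, the step size, and the number of steps are arbitrary choices for illustration.

```python
def gradient_ascent(gradient, start, step_size=0.1, steps=100):
    """Repeatedly take a small step in the direction of the gradient (uphill)."""
    x = start
    for _ in range(steps):
        x = x + step_size * gradient(x)
    return x


# Toy objective: f(x) = -(x - 3)^2, which has its maximum at x = 3.
def gradient_of_f(x):
    return -2 * (x - 3)


x_max = gradient_ascent(gradient_of_f, start=0.0)
print(round(x_max, 4))
```

Each step shrinks the distance to the peak by a constant factor, so the estimate settles at x = 3. Gradient descent is the same loop with a minus sign in the update, since descending a function is the same as ascending its negation.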

